Computer Art Blog Category Index

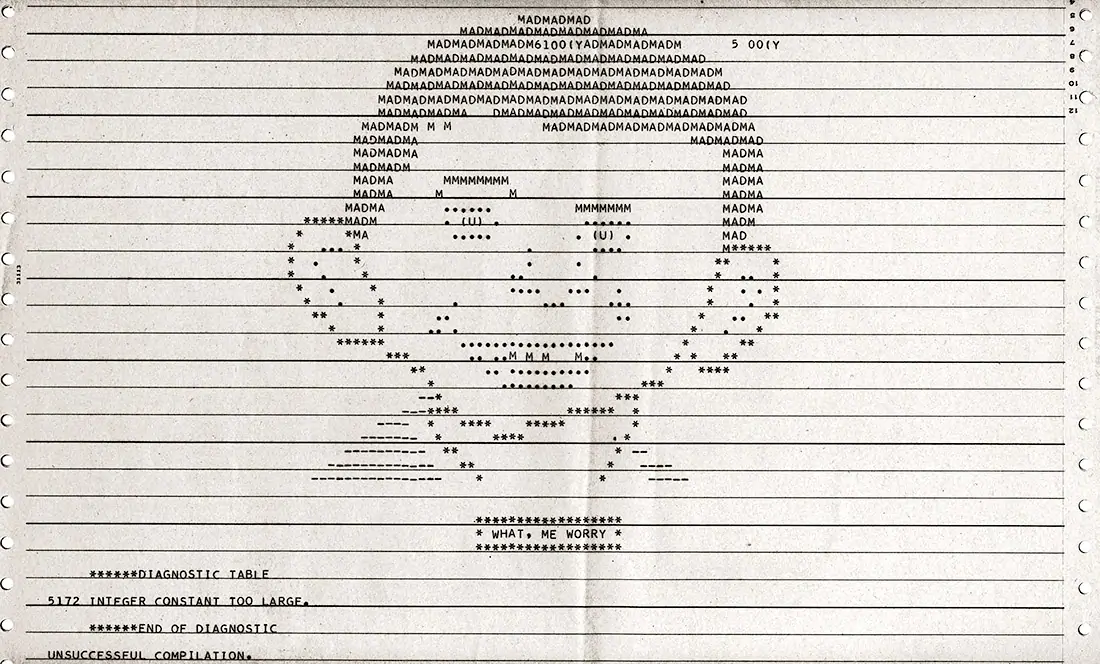

I have to say I am fascinated by the ability of computers (both hardware and software) to create works of visual, musical, and textual art. Although my professional career was devoted to writing functional programs that solved business problems, including a scripting language I invented while at IBM, I have always followed the continuing advances in creative software, and I marvel at how far we have come since the days of ASCII art.

Below are the blog posts I have placed in the computer art category.